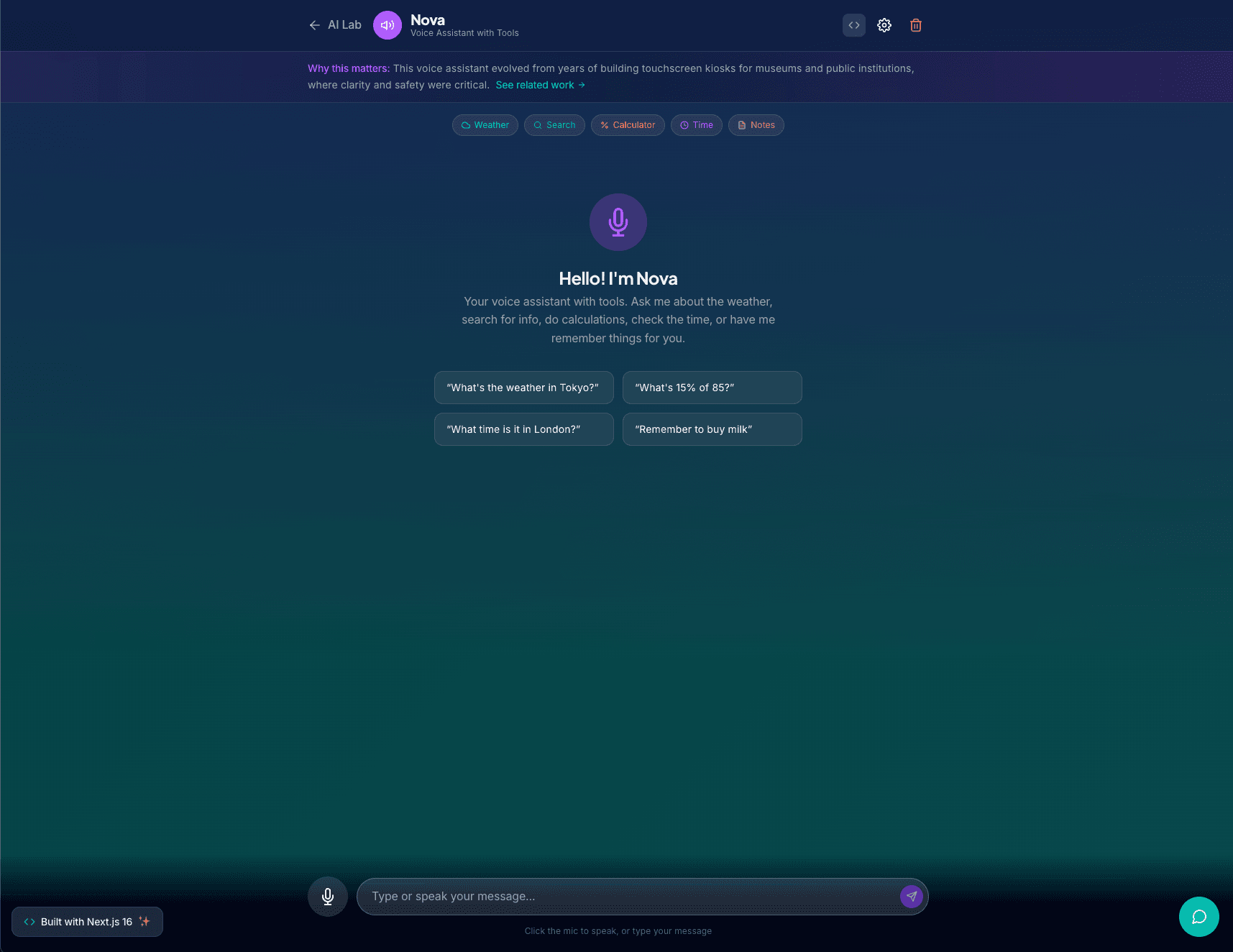

Voice Assistant with Tools

A conversational AI agent that responds to spoken commands captured with browser-based speech recognition. It can search the web, check the weather, perform calculations, report the current time in different time zones, and remember notes. Responses stream back as text and can be read aloud with OpenAI text-to-speech voices.
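The tool-calling part of the assistant can be pictured as a small dispatcher. The model emits a tool name plus JSON arguments, the app routes that call to a local handler, and the handler's text result goes back into the conversation. The following is a minimal Python sketch under stated assumptions. The tool names (`calculate`, `current_time`, `save_note`, `list_notes`), the `dispatch` helper, and the JSON argument shape are hypothetical and not taken from this project. Web search and weather are omitted because they depend on external APIs, and notes are kept only in memory.

```python
import ast
import json
import operator
from datetime import datetime
from typing import Any, Callable
from zoneinfo import ZoneInfo

# Hypothetical calculator: only numeric literals and basic arithmetic are allowed.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Mod: operator.mod,
    ast.USub: operator.neg,
    ast.UAdd: operator.pos,
}


def _eval_node(node: ast.AST) -> float:
    """Recursively evaluate a parsed arithmetic expression, rejecting anything else."""
    if isinstance(node, ast.Expression):
        return _eval_node(node.body)
    if isinstance(node, ast.Constant) and type(node.value) in (int, float):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval_node(node.left), _eval_node(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval_node(node.operand))
    raise ValueError("unsupported expression")


def calculate(expression: str) -> str:
    return str(_eval_node(ast.parse(expression, mode="eval")))


def current_time(timezone: str) -> str:
    # IANA zone name such as "Europe/Paris"; needs tz data on the host.
    return datetime.now(ZoneInfo(timezone)).strftime("%Y-%m-%d %H:%M %Z")


# Hypothetical in-memory note store; a real app would persist this.
_notes: list[str] = []


def save_note(text: str) -> str:
    _notes.append(text)
    return f"Saved note #{len(_notes)}"


def list_notes() -> str:
    return "\n".join(_notes) if _notes else "No notes yet."


# Registry mapping tool names (as the model would request them) to handlers.
TOOLS: dict[str, Callable[..., str]] = {
    "calculate": calculate,
    "current_time": current_time,
    "save_note": save_note,
    "list_notes": list_notes,
}


def dispatch(name: str, arguments_json: str) -> str:
    """Route a model-issued tool call (name + JSON arguments) to a local handler."""
    handler = TOOLS.get(name)
    if handler is None:
        return f"Unknown tool: {name}"
    try:
        args: dict[str, Any] = json.loads(arguments_json or "{}")
        return handler(**args)
    except Exception as exc:  # report failures back to the model as plain text
        return f"Tool error: {exc}"


if __name__ == "__main__":
    print(dispatch("calculate", '{"expression": "2 + 3 * 4"}'))
    print(dispatch("save_note", '{"text": "buy milk"}'))
    print(dispatch("list_notes", ""))
```

Parsing arithmetic with `ast` instead of calling `eval` keeps the calculator from executing arbitrary code that a user might dictate, and returning errors as text lets the model explain a failed tool call in its spoken reply.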